Imagine for a moment that an out-of-control streetcar is hurtling toward five people. Sitting at the controls, the driver can do nothing, knowing that those five individuals will die. Or he can flip a switch and hit a single person instead.

Doing nothing results in five fatalities. Acting will cause one person’s death. What to do?

It might all depend on where you live.

But first, the reason we need to know.

Autonomous Vehicles

A group at MIT wanted to identify what people believe is ethical, because self-driving vehicles will have to make decisions. Yes, most will involve the everyday traffic rules we (are supposed to) observe. However, especially in emergencies, some will be about life and death. A machine might have to decide whether to strike someone who is old or someone who is young. It could have to choose between harming men or women, more people or fewer, animals or humans. And like the streetcar driver, an autonomous vehicle can act or do nothing.

The Study

To find out which ethical driving decisions people think self-driving vehicles should make, those MIT researchers created an online survey. Called the Moral Machine, it presented 13 scenarios to millions of people in 233 countries and territories. In every scenario, someone had to die. The participants' task was to decide who.

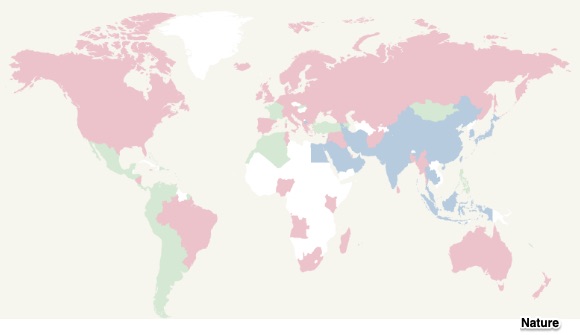

The geography of the Moral Machine:

The Results

Among the participants, there was general agreement about three moral preferences:

- save humans rather than animals

- save many rather than few

- save the young rather than the old

However, it wasn’t quite that easy. Looking beyond these general preferences at the specific answers, the researchers uncovered much more division.

Geographically, the responses to the Moral Machine questions divided into three groups:

- The Western Cluster: Values associated with Christianity characterized the answers from North America and much of Europe.

- The Eastern Cluster: People with a Confucian or Islamic heritage from places that included Japan, Indonesia, and Pakistan tended to have similar opinions.

- The Southern Cluster: The third group, not explicitly tied to specific religious roots, was from Central and South America, France, and former French colonies.

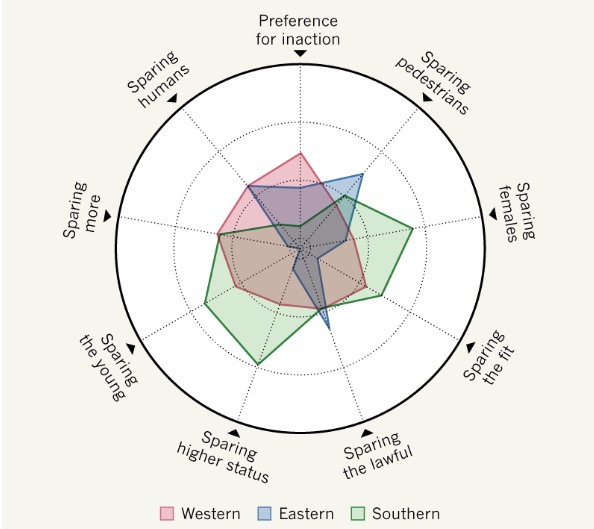

Nature Magazine illustrated the differences through a moral compass:

In addition to geography and religion, ethical preferences varied by gender, age, education, income, and politics. Institutions mattered too. In more affluent countries with well-established institutions (like Finland and Japan), survey participants were more willing to sacrifice a jaywalker who was crossing the street illegally. As for age, respondents in the Southern Cluster showed an especially strong preference for sparing the young rather than the old.

Our Bottom Line: Externalities

For self-driving cars, ethical programming has become a reality. Because it requires countless choices, those decisions will create externalities. An externality is the impact of a decision on an uninvolved third party, and it can be positive or negative.

Now, though, we can see that what people call positive or negative will vary from place to place.

I wonder if that means we will have self-driving cars with a Western, an Eastern, or a Southern personality.

My sources and more: I recommend taking a look at Nature’s article, “The Moral Machine experiment.” From there, you might also enjoy this 99% Invisible podcast and this German Ethics Commission report. And do be sure to take MIT’s Moral Machine quiz.