On the night of May 31, 2009, Air France Flight 447, flying from Rio de Janeiro to Paris, suddenly disappeared over the Atlantic. Two years later, when the black box was found, investigators concluded that an automation malfunction had required human intervention. The pilot at the controls, however, did not know how to respond.

Where are we going? To the dilemmas that automation creates.

The Automation Paradox

Automation has made aviation safer by minimizing human error. At the same time, though, because it minimizes what humans have to know, it maximizes the chance of error when it fails.

On Flight 447, the captain, a veteran with nearly 11,000 hours in the air, had flown many kinds of airplanes. But his copilot was the "Pilot Flying" for that trip. Having started his career with Air France, he was used to switching on the autopilot four minutes after takeoff. As a result, his 2,936 hours in the air did little to develop his flying expertise. Consequently, when the autopilot failed and the airspeed and altitude indicators went haywire at 35,000 feet, the copilot was unsure how to fly the plane.

Airplane manufacturers rightly point out that automation has prevented countless accidents. By reducing the chance of human error, the automated cockpit has enhanced aviation safety. The problem, though, is what to do when it fails. How do you train pilots for something that may never happen during an entire flying career?

And therein lies the paradox. The less humans need to know because of automation, the more they should know.

Our Bottom Line: Human Capital

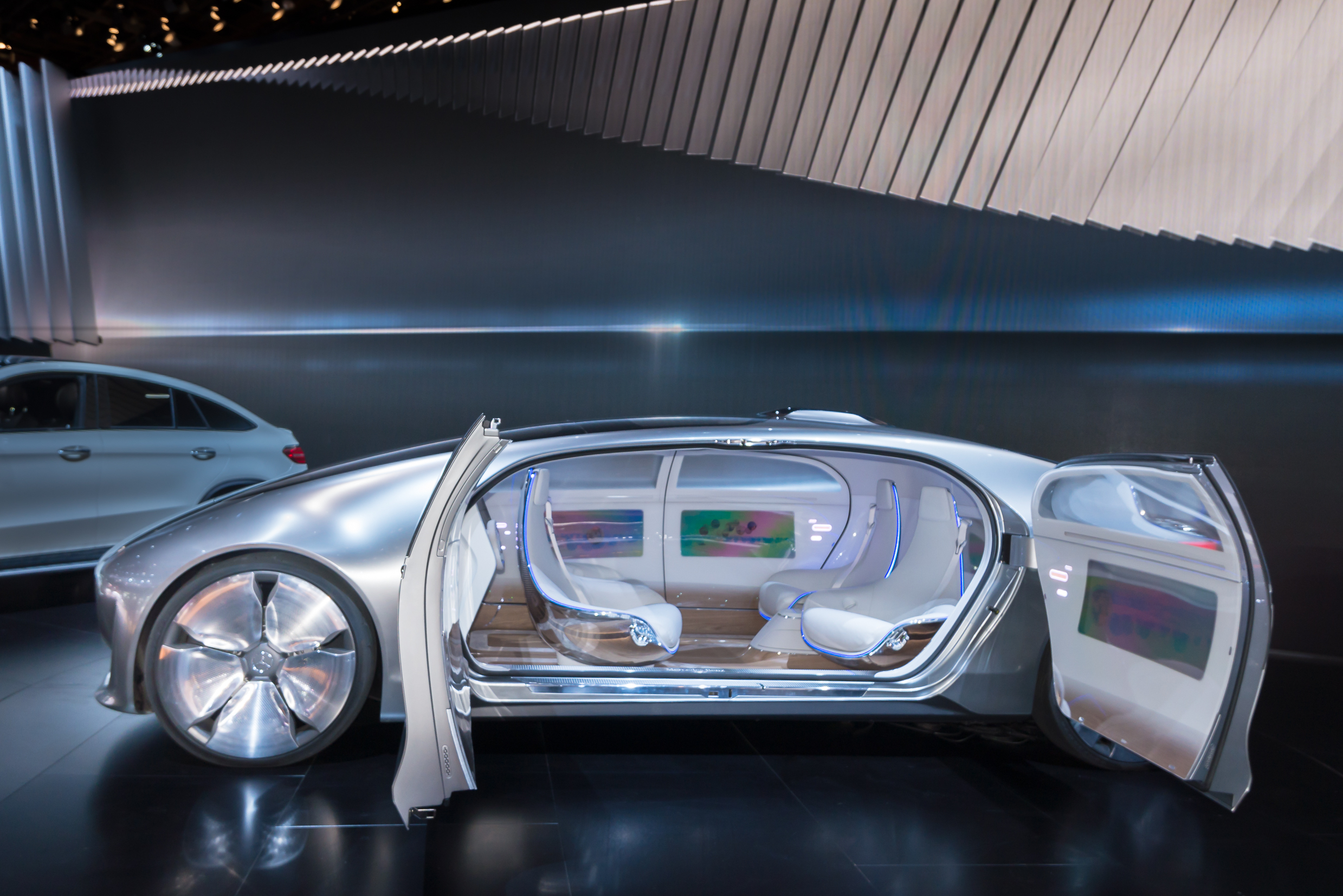

In the air and in the factory, in planes and cars, the more automated a system, the less people need to know and do. And yet, as systems become more automated, it is increasingly crucial that our human capital, the people who operate them, understand how they work. As with Flight 447, when automation fails, any error will multiply until it is fixed or becomes fatal.